Last Updated on August 22, 2025


Ethical Implications of AI: Real-World Examples That Demand Attention

Rohit Gajjam

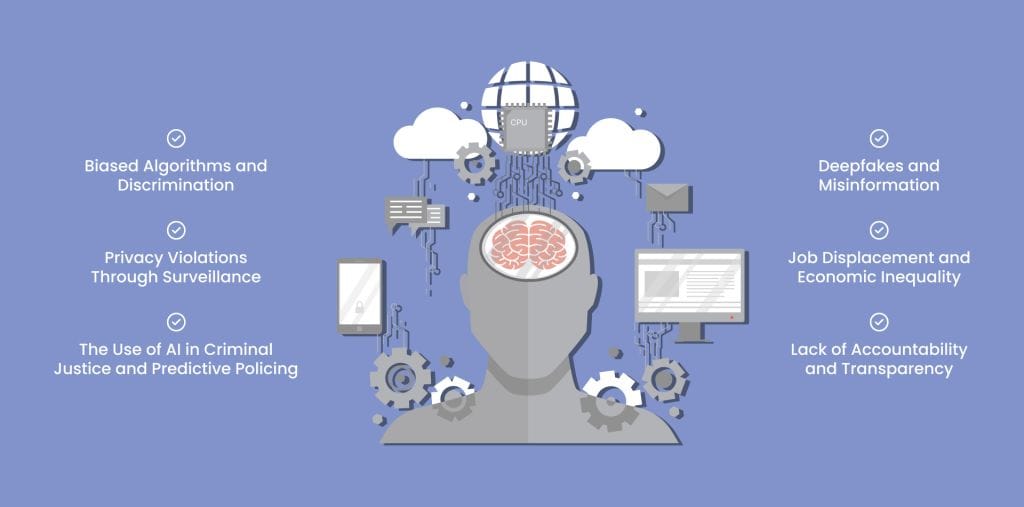

Artificial Intelligence (AI) is no longer science fiction. From personalized recommendations on Netflix to facial recognition in smartphones and predictive policing, AI is woven into our daily lives. As the technology advances, it raises growing ethical concerns about privacy, bias, accountability, and the protection of human rights.

This article explores the ethical dilemmas surrounding AI through real-world cases that have triggered global debates and shaped policy decisions. Whether you're a developer, a policymaker, or an everyday user, understanding these challenges is crucial to building a more responsible digital future.

1. Biased Algorithms and Discrimination

One of the most discussed ethical implications of AI is algorithmic bias. AI systems learn from historical data, so if that data contains bias, the system can reproduce it and may even amplify it.

Amazon’s AI Recruiting Tool

Amazon discontinued an experimental AI recruiting tool after discovering that it was biased against female applicants. The system had been trained on resumes submitted to the company over a 10-year period, most of them from men. As a result, it learned to favor male candidates and downgraded resumes that contained the word "women's," as in "women's chess club captain." This is a classic case of the ethical implications of AI directly affecting employment fairness.

2. Privacy Violations Through Surveillance

AI-powered surveillance tools can detect, track, and analyze individuals in real time. Although these tools are commonly employed for security purposes, they also pose serious ethical issues related to privacy and consent.

China’s Social Credit System

China has built a social credit system that draws on large-scale data collection to rate the trustworthiness of individuals and businesses. Although it was presented as a way to foster trust, it has raised concerns about intense surveillance and state control. For example, people placed on official blacklists can be barred from buying plane or train tickets. The system is frequently cited in discussions about the ethical risks of using data-driven technology in governance.

3. The Use of AI in Criminal Justice and Predictive Policing

AI is increasingly used in the justice system, from predicting crime hotspots to informing bail and sentencing decisions. However, such systems often inherit systemic biases from the data they are trained on.

COMPAS Algorithm in the U.S.

COMPAS is a tool used in U.S. courts to assess a defendant's risk of reoffending. A 2016 ProPublica investigation found that it was racially biased. Among defendants who did not go on to reoffend, Black defendants were nearly twice as likely as white defendants to be wrongly labeled high-risk. In criminal justice, these ethical implications of AI can have life-altering consequences, particularly for people in marginalized communities.

4. Deepfakes and Misinformation

The emergence of AI-powered deepfake technology has opened up a new era of misinformation. These hyper-realistic fake videos and audio clips can manipulate public perception and damage reputations.

Deepfake Video of Ukrainian President

In March 2022, during Russia's invasion, a deepfake video showed Ukrainian President Volodymyr Zelensky apparently urging his soldiers to surrender. It was posted to a hacked Ukrainian news website and then spread on social media. The video was quickly debunked, but the incident showed how AI-generated media can threaten national security and public trust in what is true.

5. Job Displacement and Economic Inequality

While AI can enhance productivity, it also threatens to automate millions of jobs, particularly in manufacturing, retail, and transportation. This raises concerns about widening inequality and social disruption.

Uber’s Push Toward Autonomous Vehicles

Uber's investment in self-driving technology was driven partly by the goal of reducing its reliance on human drivers. The company later sold its self-driving unit to Aurora in 2020. Even so, the effort showed how corporations are exploring AI to cut labor costs, potentially at the expense of millions of workers. This economic dimension is another ethical implication of AI that societies must confront.

6. Lack of Accountability and Transparency

One of the biggest ethical implications of AI is the "black box" problem: a system produces a decision, but even the people who build or deploy it cannot clearly explain how it was reached. This lack of transparency is a major concern in high-stakes sectors such as healthcare, finance, and law.

Apple Card Credit Limit Controversy

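In 2019, customers alleged that the Apple Card, issued by Goldman Sachs, gave women drastically lower credit limits than men with similar financial profiles. The New York State Department of Financial Services opened an investigation. In 2021, it reported finding no violation of fair lending laws. Even so, the opaque credit decisions left consumers confused and regulators alarmed, and the episode underscored the need for explainable AI and greater accountability.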

Why Ethical AI Matters

AI is powerful, but with great power comes great responsibility. Without proper ethical guidelines, AI can cause more harm than good. Governments, technology firms, and civil society need to work together to:

- Ensure transparency in AI algorithms

- Mandate fairness and reduce bias in training data

- Protect individual privacy and freedom

- Develop robust frameworks for accountability

FAQs

What are the main ethical implications of AI?

The primary ethical concerns include algorithmic bias, invasion of privacy, limited transparency, displacement of jobs, and the potential misuse in surveillance and warfare.

Can AI ever be truly unbiased?

While total neutrality is difficult, ethical AI practices—like diverse training data and transparent design—can significantly reduce bias and improve fairness.

Why is explainability important in AI systems?

Explainability allows AI decisions to be transparent and open to scrutiny, which is especially important in sensitive fields such as healthcare, finance, and justice. It builds trust and accountability.

How do AI surveillance tools violate privacy?

These tools frequently collect and monitor sensitive information without individuals’ knowledge or approval, which poses major ethical and legal challenges.

Conclusion

As AI continues to transform industries, its ethical implications are becoming too significant to ignore. Real-world cases, from biased hiring tools to risk scores in criminal justice and mass surveillance, highlight the urgent need for responsible AI practices. Ethical AI is not optional. It is essential for building a fair, inclusive, and secure technological future.

Stakeholders across sectors must collaborate to ensure AI development aligns with human values. By addressing bias, ensuring transparency, and promoting accountability, we can turn AI from a potential risk into a transformative force for good.